What is an HGX server?

HGX is a design framework created by NVIDIA. An HGX server is a system that adheres to the NVIDIA HGX reference architecture, specifically structured to offer a versatile and scalable platform tailored for data centers managing demanding AI and HPC applications. It enables the integration of NVIDIA GPUs and other hardware components.

What are the 7 key features of an HGX server?

- NVIDIA GPU support

The primary purpose of an HGX server is to support NVIDIA GPUs, which are widely used for AI and HPC workloads. The server is designed to accommodate multiple GPUs for parallel processing tasks.

- NVLink Interconnect

HGX servers may incorporate NVIDIA's NVLink technology, a high-speed interconnect that enables fast communication between GPUs. NVLink enhances data transfer rates and enables improved performance for parallel computing workloads.

- Modular design

This server typically features a modular design that allows for flexibility in configuring hardware components. This modularity enables data centers to customize the server based on their specific requirements, including the type and number of GPUs.

- Scalability

Designed to be scalable, HGX servers allow data centers to build powerful computing clusters by connecting multiple HGX-based servers. This scalability is crucial for handling the increasing demands of AI and HPC applications.

- Industry standardization

HGX is designed as a reference architecture, aiming for industry standardization. This means that the design is provided by NVIDIA for collaboration with various hardware vendors. Partners, such as 2CRSi, can implement the HGX design.

- Software compatibility

HGX servers are intended to be compatible with various software frameworks and tools used in the AI and HPC fields. This ensures that the servers can integrate into existing workflows and support a wide range of applications.

- Versatility in applications

The modular design and compatibility with different GPUs make HGX servers versatile, capable of supporting a variety of workloads ranging from deep learning and AI training to scientific simulations and data analytics.
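
As a rough illustration of the GPU support and software compatibility points above, the following sketch lists the GPUs a node exposes by calling `nvidia-smi`, the command-line tool that ships with the NVIDIA driver. The helper names `parse_gpu_line` and `list_gpus` are hypothetical and chosen only for this example. On a machine without the NVIDIA driver, the function simply returns an empty list.

```python
import shutil
import subprocess


def parse_gpu_line(line):
    """Split one CSV line of the form 'name, memory' into a (name, memory) tuple."""
    name, memory = [field.strip() for field in line.split(",", 1)]
    return name, memory


def list_gpus():
    """Return (name, total memory) for each visible GPU, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=False,
            timeout=30,
        )
    except (subprocess.TimeoutExpired, OSError):
        return []
    if result.returncode != 0:
        return []
    return [parse_gpu_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    for index, (name, memory) in enumerate(list_gpus()):
        print(f"GPU {index}: {name} ({memory})")
```

On an 8-GPU HGX system, this would be expected to print eight entries, one per SXM module.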

HGX servers for AI

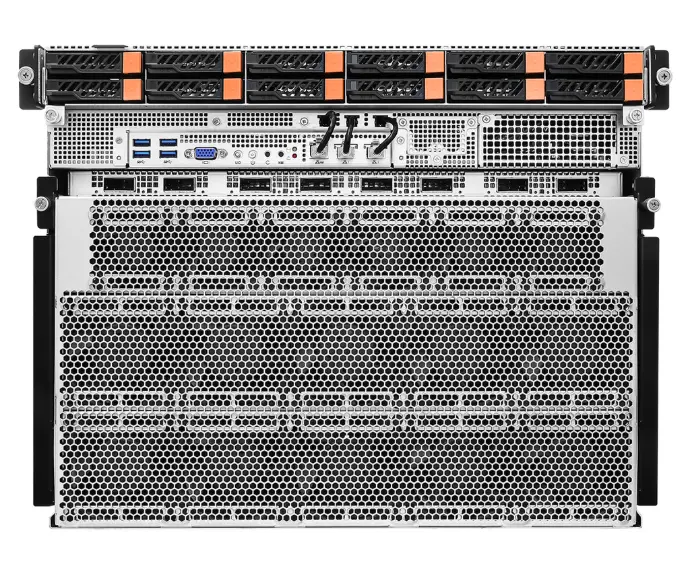

Godì 1.8E2D-NV8

8U 19"

The excellence of an AI supercomputing platform based on Intel® Xeon® 6th generation and 8x NVIDIA B300 SXM6.

Godì 1.8 ER-NV8

7U 19"

AI Supercomputing Platform based on 2x Intel® Xeon® 5th generation and 8x NVIDIA H200 SXM5.

HGX server, based on the SXM platform

Our HGX servers, the Godì 1.8 range, are based on the latest SXM platform.

PCIe vs. SXM

NVIDIA H200/B200/B300 GPUs come in two form factors: PCIe and SXM.

The SXM platform is a GPU form factor and interconnect designed for data centers and AI supercomputing. Unlike standard PCIe GPUs, SXM modules are directly mounted on a baseboard and connected through NVLink, enabling much higher bandwidth, lower latency, and greater power delivery.

This design allows the GPUs on a baseboard to communicate with each other far more efficiently than they could over PCIe alone.

On the other hand, replacing or upgrading a PCIe GPU is rather simple, as it involves only removing the card from its slot. In contrast, SXM GPUs are mounted on the baseboard beneath a large heatsink, making replacement trickier.

However, owing to NVLink and NVSwitch, SXM GPUs deliver higher GPU-to-GPU bandwidth than PCIe GPUs, which makes them well suited to data-intensive workloads spread across many GPUs and, with fast networking between servers, across multiple nodes.
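
To give a feel for the bandwidth gap described above, here is a back-of-the-envelope sketch. The figures are assumptions based on publicly quoted peak numbers, not measurements of Godì systems: roughly 128 GB/s aggregate bidirectional for a PCIe Gen5 x16 slot, 900 GB/s per GPU for the NVLink generation used by H200 SXM, and 1,800 GB/s per GPU for the NVLink generation used by B200/B300 SXM. The function name and the 100 GB payload are hypothetical, and real transfers are slower because of protocol overhead and contention, so only the ratios are meaningful.

```python
# Peak aggregate bandwidth per GPU in GB/s (assumed, publicly quoted figures).
PEAK_BANDWIDTH_GBPS = {
    "PCIe Gen5 x16": 128,
    "NVLink (H200 SXM)": 900,
    "NVLink (B200/B300 SXM)": 1800,
}


def ideal_transfer_seconds(payload_gb, bandwidth_gbps):
    """Idealized time to move payload_gb gigabytes over a link of bandwidth_gbps GB/s."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gbps


if __name__ == "__main__":
    payload_gb = 100  # hypothetical amount of data exchanged between GPUs
    for link, bandwidth in PEAK_BANDWIDTH_GBPS.items():
        seconds = ideal_transfer_seconds(payload_gb, bandwidth)
        print(f"{link}: {seconds:.3f} s for {payload_gb} GB")
```

Under these assumptions, the NVLink generation used by H200 SXM moves the same payload about 7 times faster than a PCIe Gen5 x16 link (900 / 128 ≈ 7.0), and the generation used by B200/B300 SXM doubles that again.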