NVIDIA launches its brand-new H200 GPU for AI!

NVIDIA H200 GPU elevates your computing power

The next leap in GPU technology is here, and it’s redefining the landscape of AI, high-performance computing, and data analytics. The NVIDIA H200 GPU is designed to deliver unprecedented levels of performance, efficiency, and scalability, setting a new benchmark for the industry.

H200 GPU: for which applications?

NVIDIA leads the AI industry thanks to its GPU technology: the A100, the H100 and now the brand-new H200. Tailored for Large Language Models, H200 GPUs are launching at the end of 2024 and promise to revolutionize every industry.

They can train complex neural networks faster and with more accuracy, and they can run simulations, scientific computing workloads and any other application that demands extreme computational power.

Industry examples

Finance

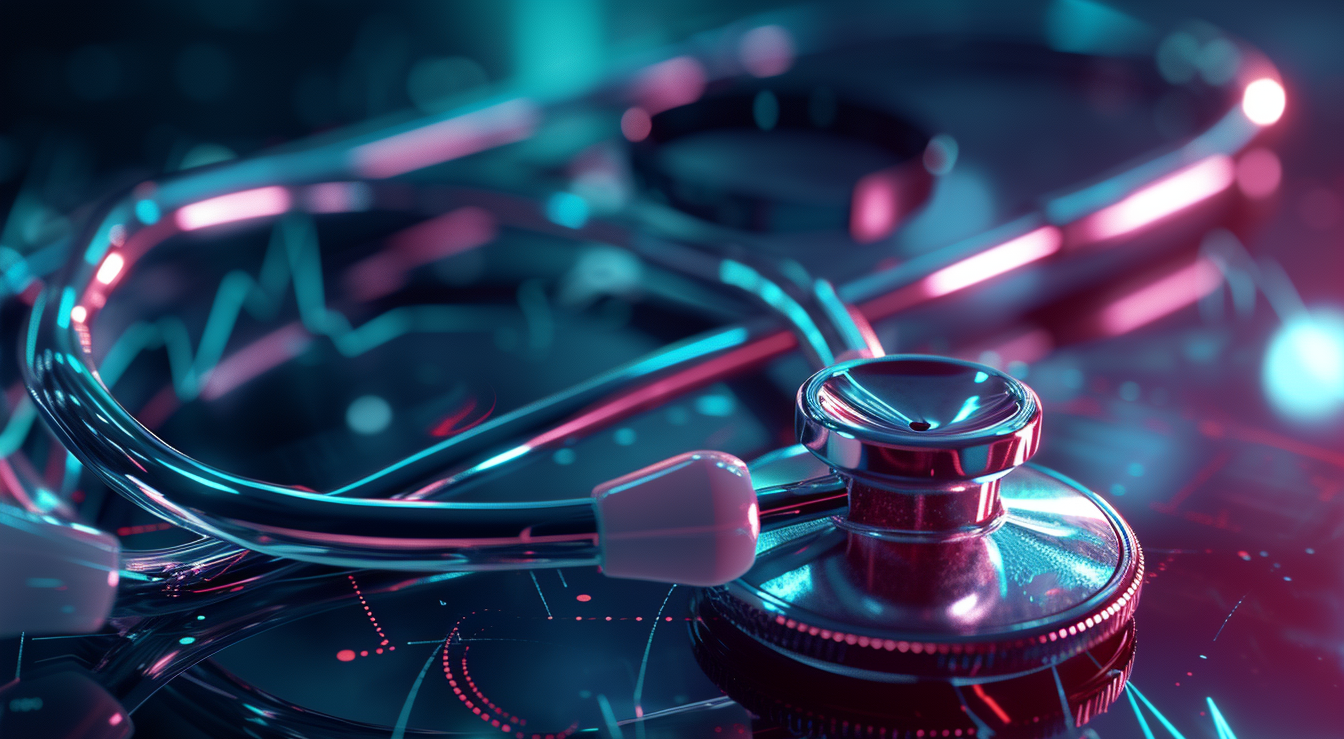

Healthcare

Retail

Telecommunications

Media & Entertainment

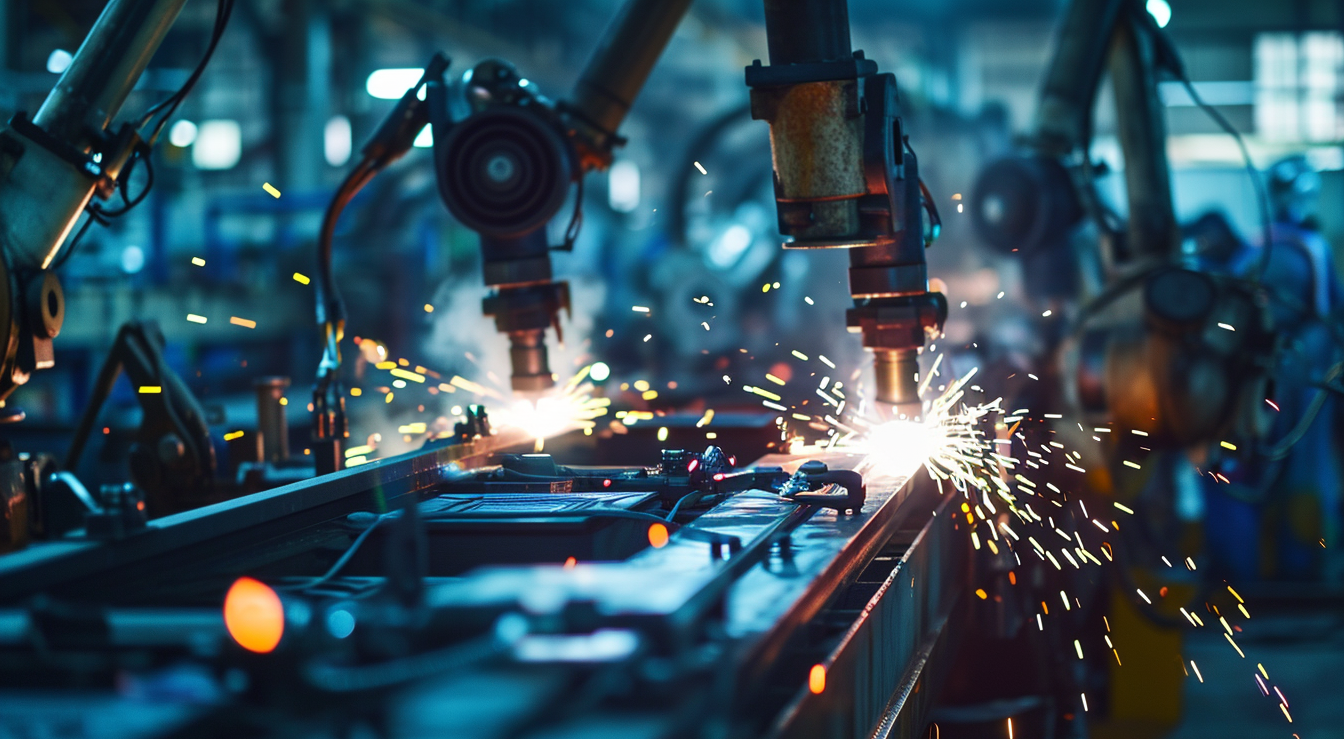

Manufacturing

Energy

How the H200 outperforms the H100

The NVIDIA H200 is not just an upgrade; it's a revolution. Here’s how it outshines its predecessor, the H100:

Performance

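The H200 is built on the same Hopper architecture as the H100 and offers the same peak Tensor Core throughput (up to 3,958 TFLOPS in FP8). Its performance boost over the H100 comes from feeding those cores faster: larger and faster memory speeds up memory-bound workloads such as Large Language Model inference.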

Bandwidth

The H200’s 141GB of HBM3e memory delivers 4.8TB/s of bandwidth, compared with 80GB of HBM3 at 3.35TB/s on the H100 SXM. This allows for faster data processing and the ability to handle larger, more complex datasets.
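To give a feel for what the extra bandwidth means in practice, here is a rough back-of-the-envelope sketch. It assumes text generation is entirely limited by memory bandwidth and that every generated token requires reading all model weights once, which is a simplification. The 70-billion-parameter FP8 model is a hypothetical example, and the H100 figure of 3.35TB/s comes from NVIDIA's H100 SXM datasheet rather than from this page.

```python
# Rough ceiling on single-stream token generation speed.
# Assumption: decoding is purely memory-bandwidth bound and each token
# reads every model weight exactly once (ignores KV cache, batching, etc.).

H200_BANDWIDTH_TBPS = 4.8   # from the specification table on this page
H100_BANDWIDTH_TBPS = 3.35  # assumption: NVIDIA H100 SXM datasheet figure


def max_tokens_per_second(bandwidth_tbps: float,
                          params_billion: float,
                          bytes_per_param: float) -> float:
    """Upper bound on tokens/s when weights are streamed once per token."""
    model_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tbps * 1e12 / model_bytes


# Hypothetical model: 70 billion parameters stored in FP8 (1 byte each).
h200 = max_tokens_per_second(H200_BANDWIDTH_TBPS, 70, 1)
h100 = max_tokens_per_second(H100_BANDWIDTH_TBPS, 70, 1)

print(f"H200 ceiling: {h200:.1f} tokens/s")   # 68.6
print(f"H100 ceiling: {h100:.1f} tokens/s")   # 47.9
print(f"Ratio: {h200 / h100:.2f}x")           # 1.43x
```

Real-world throughput depends on batching, software stack and model architecture, so these numbers are ceilings for comparison, not benchmarks.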

Power Efficiency

Despite its increased power, the H200 is designed with advanced power management technologies, making it more energy-efficient and reducing operational costs and environmental impact.

Scalability

The latest NVLink technology in the H200 allows for even more efficient multi-GPU setups, with up to 900GB/s of GPU-to-GPU bandwidth. This makes it easier for companies to scale up computing power as their needs grow.
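As an illustration of why the interconnect matters when scaling across GPUs, the sketch below estimates the time to synchronize data between GPUs with a ring all-reduce, a common pattern in multi-GPU training. It is an idealized model: it treats the NVLink (900GB/s) and PCIe Gen5 (128GB/s) figures from the specification table as fully usable rates and ignores latency and protocol overhead. The 16GB payload and the function name are hypothetical.

```python
# Idealized ring all-reduce time: each GPU transfers 2 * (N - 1) / N times
# the payload size. Assumption: the quoted interconnect figures are fully
# usable and latency is negligible.

NVLINK_GBPS = 900.0     # from the specification table (H200 SXM)
PCIE_GEN5_GBPS = 128.0  # from the specification table


def ring_allreduce_seconds(payload_gb: float,
                           num_gpus: int,
                           link_gbps: float) -> float:
    """Estimated time to all-reduce `payload_gb` across `num_gpus` GPUs."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize with a single GPU
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * payload_gb
    return traffic_gb / link_gbps


# Hypothetical example: 16GB of gradients across 8 GPUs.
nvlink_time = ring_allreduce_seconds(16, 8, NVLINK_GBPS)
pcie_time = ring_allreduce_seconds(16, 8, PCIE_GEN5_GBPS)

print(f"NVLink:    {nvlink_time * 1000:.1f} ms")
print(f"PCIe Gen5: {pcie_time * 1000:.1f} ms")
print(f"Speed-up:  {pcie_time / nvlink_time:.1f}x")
```

Under these assumptions the ratio between the two results simply reflects the ratio of the two bandwidth figures; measured results on a real system will differ.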

Server HGX 8x H200 SXM5

HGX H200 AI server: the Godì 1.8ER-NV8

6U 19"

Excellence in AI supercomputing: a platform based on 5th-generation Intel® Xeon® processors and NVIDIA HGX with 8x H200 SXM5!

NVIDIA H200 GPU Technical Specifications

The NVIDIA H200 is more than just a GPU; it’s a powerful tool designed to push the boundaries of what’s possible in AI, HPC, and beyond. With its unmatched performance, advanced features, and broad application range, the H200 is the ideal choice for professionals and researchers who require the best.

| | H200 SXM | H200 NVL |
| --- | --- | --- |
| FP64 | 34 TFLOPS | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS | 67 TFLOPS |
| FP32 | 67 TFLOPS | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS | 989 TFLOPS |
| BFLOAT16 Tensor Core | 1,979 TFLOPS | 1,979 TFLOPS |
| FP16 Tensor Core | 1,979 TFLOPS | 1,979 TFLOPS |
| FP8 Tensor Core | 3,958 TFLOPS | 3,958 TFLOPS |
| INT8 Tensor Core | 3,958 TFLOPS | 3,958 TFLOPS |
| GPU Memory | 141GB | 141GB |
| GPU Memory Bandwidth | 4.8TB/s | 4.8TB/s |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |
| Confidential Computing | Supported | Supported |
| Max Thermal Design Power (TDP) | Up to 700W (configurable) | Up to 600W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @16.5GB each | Up to 7 MIGs @16.5GB each |
| Form Factor | SXM | PCIe |
| Interconnect | NVIDIA NVLink™: 900GB/s; PCIe Gen5: 128GB/s | 2- or 4-way NVIDIA NVLink bridge: 900GB/s; PCIe Gen5: 128GB/s |
| Server Options | NVIDIA HGX™ H200 partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs | NVIDIA MGX™ H200 NVL partner and NVIDIA-Certified Systems with up to 8 GPUs |
| NVIDIA AI Enterprise | Add-on | Included |
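
The per-GPU figures above can be scaled to a full HGX system with 4 or 8 GPUs. The short sketch below does that arithmetic and adds a simple check of whether a model's weights would fit in GPU memory. The `GpuSpec` class, the helper names and the 80% headroom factor (leaving room for activations and caches) are assumptions made for illustration, as are the example model sizes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    memory_gb: float       # GPU memory per device
    bandwidth_tbps: float  # memory bandwidth per device


# Values taken from the specification table above.
H200_SXM = GpuSpec("H200 SXM", memory_gb=141, bandwidth_tbps=4.8)


def system_totals(gpu: GpuSpec, count: int) -> dict:
    """Aggregate memory and memory bandwidth for `count` identical GPUs."""
    return {
        "memory_gb": gpu.memory_gb * count,
        "bandwidth_tbps": gpu.bandwidth_tbps * count,
    }


def weights_fit(params_billion: float, bytes_per_param: float,
                gpu: GpuSpec, count: int, headroom: float = 0.8) -> bool:
    """True if the weights fit in `headroom` of total GPU memory.

    Assumption: 1 billion parameters at 1 byte each is about 1GB, and
    20% of memory is reserved for activations and caches.
    """
    needed_gb = params_billion * bytes_per_param
    return needed_gb <= gpu.memory_gb * count * headroom


for count in (4, 8):
    totals = system_totals(H200_SXM, count)
    print(f"{count}x {H200_SXM.name}: {totals['memory_gb']:.0f}GB, "
          f"{totals['bandwidth_tbps']:.1f}TB/s aggregate")

# Hypothetical model sizes, for illustration only.
print(weights_fit(70, 1, H200_SXM, 1))   # 70B parameters in FP8 on one GPU
print(weights_fit(70, 2, H200_SXM, 1))   # 70B parameters in FP16 on one GPU
print(weights_fit(405, 2, H200_SXM, 8))  # 405B parameters in FP16 on 8 GPUs
```

This is a sizing aid only; actual memory requirements depend on batch size, context length and the inference or training framework used.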

Interested? Get in touch with our sales team! We have servers and configurations available now.

2CRSi is an NVIDIA Elite Partner.