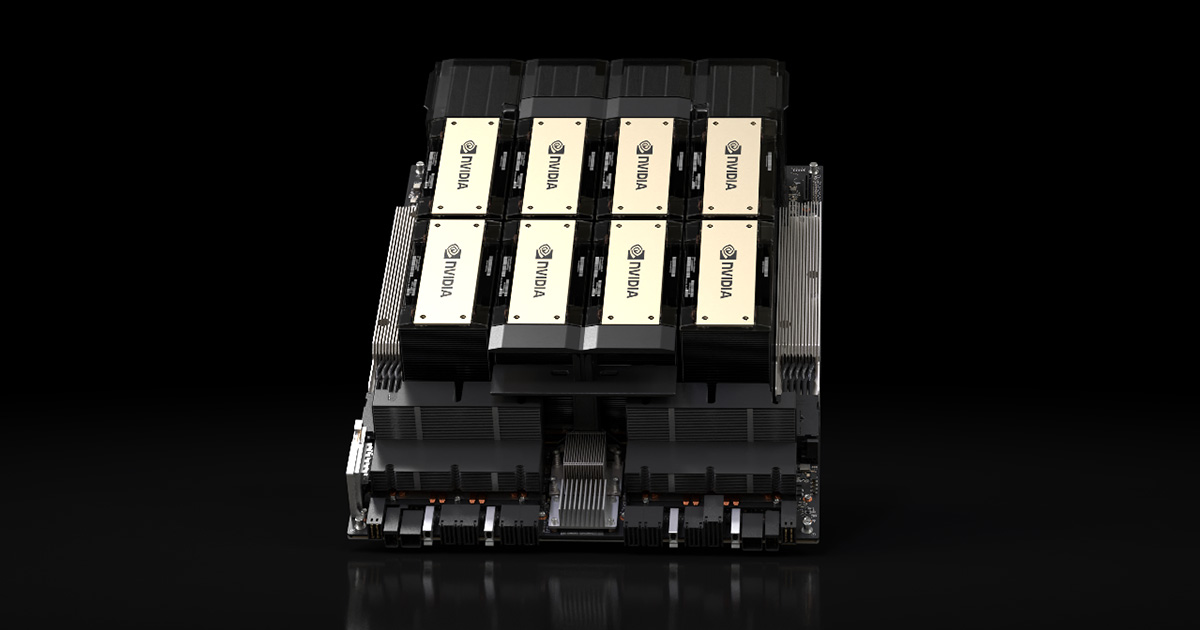

HGX™ Server powered by 8x H200 SXM5 GPUs

Get ahead of the competition!

A complete powerhouse AI server with 8x H200 SXM5 GPUs

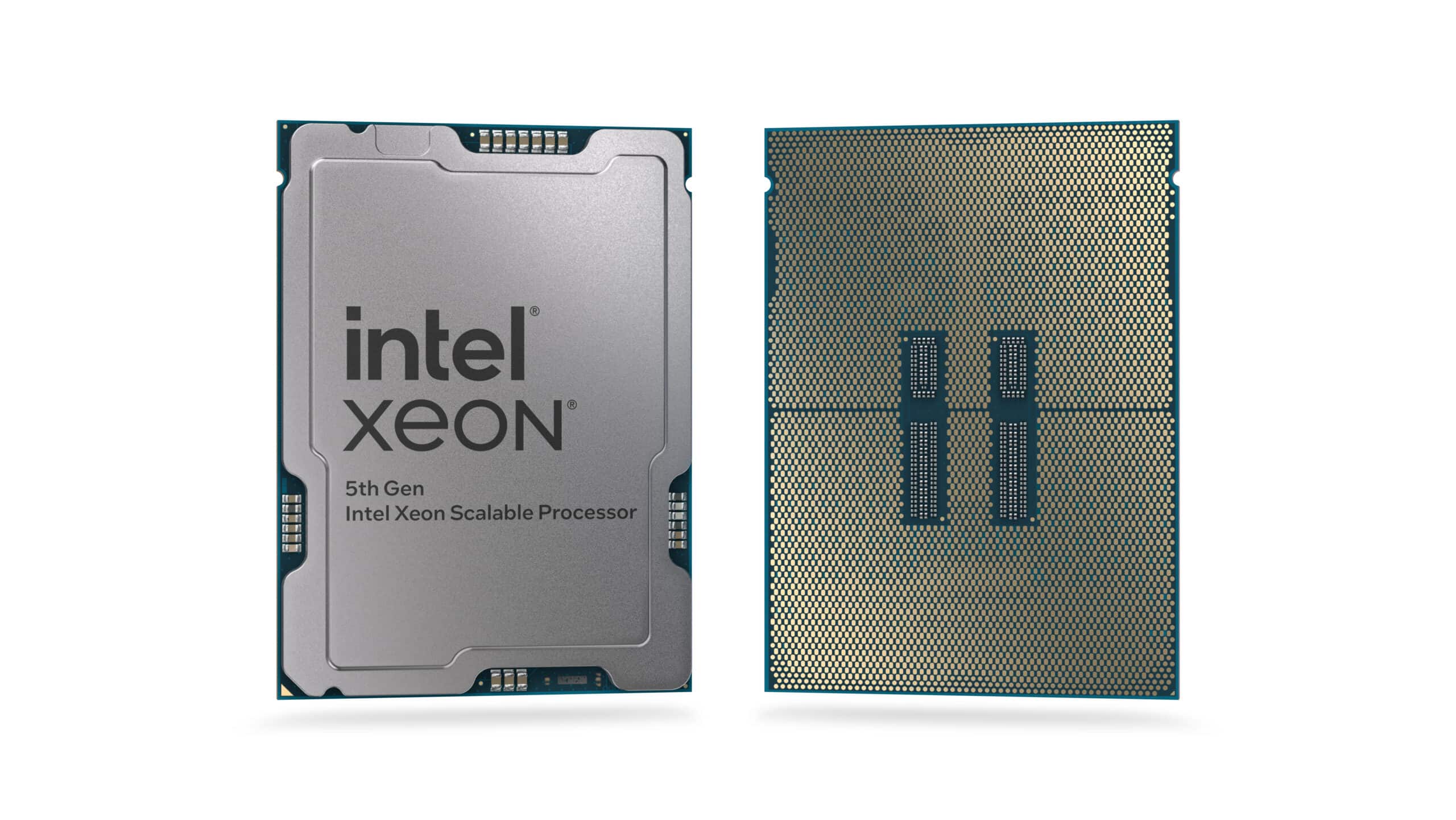

Meet our latest H200 server, powered by an HGX™ platform with 8 H200 SXM5 GPUs and two 5th Generation Intel® Xeon® Scalable processors.

This H200 server is designed for AI experts in search of power, speed, and reliability.

Order now to be ahead of the competition with your H200 server!

Godì 1.8 ER-NV8: H200 server

19", based on Intel® Xeon® 5th generation and NVIDIA SXM5 with 8 H200 141GB!

Get in touch

Attention: NVIDIA H200 GPUs are under embargo from the United States Department of Commerce towards multiple countries across the world. 2CRSi group and all its subsidiaries follow US trade legislation and will ensure that a thorough compliance process is complete before any shipment of their GPU systems. Feel free to ask any question regarding the compliance process to your 2CRSi sales agent or by contacting the U.S. Department of Commerce.

HGX™ 8 NVIDIA® H200 141GB

With current models exceeding 175 billion parameters, the H200 SXM5 server with 8 GPUs uses Transformer Engine, NVLink, and 1,128GB of HBM3e memory to provide optimum performance and easy scaling across any data center, bringing LLMs to the mainstream.

| Specification | Value |
| --- | --- |
| GPU Memory | 141GB |
| GPU Memory Bandwidth | 4.8TB/s |
| FP8 Tensor Core Performance | 4 PetaFLOPS |
| Form Factor | SXM |
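As a rough illustration of what the per-GPU memory figure means at server level, the sketch below multiplies it across the 8 GPUs and compares the result with the weight footprint of a 175-billion-parameter model. The function names are hypothetical, and the estimate counts model weights only (2 bytes per parameter in FP16, 1 byte in FP8); the KV cache, activations and framework overhead are deliberately ignored, so real requirements will be higher.

```python
GPU_COUNT = 8        # GPUs on the HGX baseboard
GPU_MEMORY_GB = 141  # HBM3e per H200 GPU, taken from the specification table


def total_gpu_memory_gb(gpu_count: int = GPU_COUNT,
                        per_gpu_gb: int = GPU_MEMORY_GB) -> int:
    """Aggregate GPU memory of the server in GB."""
    return gpu_count * per_gpu_gb


def weights_footprint_gb(params_billions: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes).

    params_billions * 1e9 parameters * bytes_per_param / 1e9 bytes per GB.
    """
    return params_billions * bytes_per_param


if __name__ == "__main__":
    total = total_gpu_memory_gb()
    for precision, nbytes in (("FP16", 2), ("FP8", 1)):
        need = weights_footprint_gb(175, nbytes)
        print(f"{precision}: {need:.0f} GB of weights, "
              f"{total - need:.0f} GB left out of {total} GB")
```

Under these assumptions, 8 x 141GB gives 1,128GB in total, so a 175-billion-parameter model stored in FP16 (about 350GB of weights) leaves most of the memory free for caches and batching.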

Dual CPU socket for Intel® Xeon® Scalable 5th Gen

AI workloads are extremely demanding.

Our HGX H200 server, the Godì 1.8 ER-NV8, harnesses the state-of-the-art processing power of the latest generation of Intel® Xeon® Scalable processors, built on the Intel 7 manufacturing process.

This harmonious combination of exceptional hardware and cutting-edge manufacturing technology enhances your overall experience, delivering superior performance, reliability and efficiency.

DDR5 & PCIe Gen 5 ready

DDR5 offers higher data transfer rates and increased bandwidth for improved system responsiveness and energy efficiency.

PCIe Gen 5 doubles the maximum theoretical data transfer rate per lane compared to PCIe Gen 4.

In combination, DDR5 and PCIe Gen 5 deliver a powerful synergy that boosts overall system performance, responsiveness, and energy efficiency, catering to the ever-increasing demands of modern computing workloads such as AI.
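To put a number on the PCIe doubling, here is a minimal sketch of the theoretical one-direction link bandwidth. It assumes the published per-lane signaling rates (16 GT/s for Gen 4, 32 GT/s for Gen 5) and the 128b/130b line encoding both generations use; the function names are hypothetical, and protocol overhead is ignored, so real throughput is lower.

```python
# Per-lane signaling rate in gigatransfers per second (one bit per transfer).
TRANSFER_RATE_GT_S = {"Gen 4": 16.0, "Gen 5": 32.0}

# 128b/130b encoding: 128 payload bits are carried in every 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def lane_bandwidth_gb_s(generation: str) -> float:
    """Theoretical one-direction bandwidth of a single lane, in GB/s."""
    return TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY / 8


def link_bandwidth_gb_s(generation: str, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth of a link with `lanes` lanes."""
    return lane_bandwidth_gb_s(generation) * lanes


if __name__ == "__main__":
    for generation in TRANSFER_RATE_GT_S:
        print(f"PCIe {generation} x16: "
              f"{link_bandwidth_gb_s(generation):.1f} GB/s per direction")
```

For an x16 slot this works out to roughly 31.5 GB/s per direction on Gen 4 and roughly 63 GB/s on Gen 5.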

NVIDIA H200 vs H100

Launched in 2023, the H100 GPUs were quickly out of stock as they were the best GPUs for AI workloads on the market. In 2024, NVIDIA launched the upgraded version: the H200.

In what ways is the H200 GPU even more powerful than its predecessor, the H100?

Performance

H100 GPU: held the title of the best GPU on the market, tailored for heavy and intensive AI workloads.

H200 GPU: this GPU significantly improves performance for LLMs, with up to 2 times faster inference compared to the H100 thanks to the increase in memory capacity.

Memory & bandwidth

H100 GPU: features 80GB of HBM3 memory and a bandwidth of around 3.35 TB/s.

H200 GPU: features 141GB of HBM3e memory and a bandwidth of 4.8 TB/s, which improves data handling capabilities.
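The two sets of figures above can be turned into simple ratios. The sketch below is only an illustration: the dictionary layout and function name are hypothetical, and the inputs are the per-GPU capacities and bandwidths quoted in this comparison.

```python
# Per-GPU figures quoted in the comparison above.
H100 = {"memory_gb": 80, "bandwidth_tb_s": 3.35}
H200 = {"memory_gb": 141, "bandwidth_tb_s": 4.8}


def improvement_ratio(new: dict, old: dict, key: str) -> float:
    """How many times larger the `key` figure is on the newer GPU."""
    return new[key] / old[key]


if __name__ == "__main__":
    mem = improvement_ratio(H200, H100, "memory_gb")
    bw = improvement_ratio(H200, H100, "bandwidth_tb_s")
    print(f"Memory capacity:  {mem:.2f}x")
    print(f"Memory bandwidth: {bw:.2f}x")
    # Same comparison at server level, with 8 GPUs per system.
    print(f"8-GPU memory: {8 * H100['memory_gb']} GB vs "
          f"{8 * H200['memory_gb']} GB")
```

With these inputs, the H200 offers about 1.76 times the memory capacity and about 1.43 times the memory bandwidth of the H100.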

Architectural improvements

H100 GPU: based on the Hopper architecture and uses HBM3 memory.

H200 GPU: also based on the Hopper architecture, but is the first GPU to feature HBM3e memory.

Energy efficiency & cost

H100 GPU: already known for its energy efficiency.

H200 GPU: expected to offer better energy efficiency and a lower TCO thanks to its advanced memory and bandwidth capabilities.

The H200 GPU builds on the strengths of the H100 and offers significant improvements in memory capacity and bandwidth, which translates to better performance for data-intensive tasks.

Its compatibility with existing systems makes it an attractive upgrade for organizations looking to enhance their AI and HPC capabilities.